By Shiyu Jia & Zhenkuan Pan

Surgery simulation reproduces surgical procedures in a computer-generated virtual environment. It is an application of virtual reality technology in medicine. Non-real-time surgery simulations are usually used for surgery planning, while real-time surgery simulations are used for surgery training. Compared with conventional training methods, such as practicing on cadavers and dummies, real-time surgery simulation is more cost-effective, can be more immersive and interactive, can be repeated any number of times without causing real harm, and can easily be adapted to the physiological parameters of different patients. Our research focuses on designing and implementing mathematical models and algorithms for real-time surgery simulation, including the deformation of deformable objects, user interaction through haptic devices, and the simulation of surgical operations such as cutting.

We have developed a surgery simulation software system in C++. The system is composed of several modules: a user interface, a graphics renderer, a haptic device manager, collision detection, deformation calculation, a cutting tool, and a touching and grasping tool. The system is multi-threaded and can therefore take advantage of multiple CPUs or multi-core CPUs. Graphics rendering is implemented with OpenGL 2.0 and GLSL shaders. PHANToM haptic devices from SensAble Technologies are currently supported through the GHOST SDK. The haptic update loop runs in a separate thread to decouple it from collision detection and deformation calculation.

To facilitate algorithm implementation, we designed a data structure that stores the geometrical, topological and mechanical information of a target object. The object is represented by a tetrahedral mesh composed of vertices, edges, triangular faces and tetrahedrons. Each element holds relational information about its neighboring elements. For example, each edge has two pointers to its two vertices and a list of pointers to the tetrahedrons that contain it. Each tetrahedron also stores mechanical properties such as density, Young’s modulus and Poisson’s ratio.
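The relationships described above can be sketched in C++ as follows. This is a minimal illustration rather than the system's actual data structure: the type and member names (`Vertex`, `Edge`, `Tetrahedron`, `TetMesh`, `addTetrahedron`) are hypothetical, triangular faces are omitted for brevity, and edges are located by a linear search that a real-time implementation would probably replace with a faster lookup.

```cpp
#include <array>
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

struct Tetrahedron;  // forward declaration so Edge can point at tetrahedra

struct Vertex {
    double x, y, z;
};

struct Edge {
    std::array<Vertex*, 2> vertices{};     // the two end points of the edge
    std::vector<Tetrahedron*> tetrahedra;  // every tetrahedron that contains this edge
};

struct Tetrahedron {
    std::array<Vertex*, 4> vertices{};
    std::array<Edge*, 6> edges{};
    double density = 0.0;        // mechanical properties stored per element
    double youngsModulus = 0.0;
    double poissonsRatio = 0.0;
};

// Hypothetical container: owns all elements and keeps the pointers consistent.
struct TetMesh {
    std::vector<std::unique_ptr<Vertex>> vertices;
    std::vector<std::unique_ptr<Edge>> edges;
    std::vector<std::unique_ptr<Tetrahedron>> tetrahedra;

    Vertex* addVertex(double x, double y, double z) {
        vertices.push_back(std::make_unique<Vertex>(Vertex{x, y, z}));
        return vertices.back().get();
    }

    // Returns the existing edge between a and b, or creates it (linear search).
    Edge* findOrAddEdge(Vertex* a, Vertex* b) {
        for (auto& e : edges) {
            if ((e->vertices[0] == a && e->vertices[1] == b) ||
                (e->vertices[0] == b && e->vertices[1] == a)) {
                return e.get();
            }
        }
        auto e = std::make_unique<Edge>();
        e->vertices = {a, b};
        edges.push_back(std::move(e));
        return edges.back().get();
    }

    Tetrahedron* addTetrahedron(Vertex* a, Vertex* b, Vertex* c, Vertex* d,
                                double density, double youngsModulus,
                                double poissonsRatio) {
        assert(a && b && c && d);
        auto t = std::make_unique<Tetrahedron>();
        t->vertices = {a, b, c, d};
        t->density = density;
        t->youngsModulus = youngsModulus;
        t->poissonsRatio = poissonsRatio;
        // The six vertex pairs of a tetrahedron, in a fixed order.
        const int pairs[6][2] = {{0, 1}, {0, 2}, {0, 3}, {1, 2}, {1, 3}, {2, 3}};
        for (int i = 0; i < 6; ++i) {
            Edge* e = findOrAddEdge(t->vertices[pairs[i][0]], t->vertices[pairs[i][1]]);
            e->tetrahedra.push_back(t.get());  // edge -> tetrahedron back-reference
            t->edges[i] = e;
        }
        tetrahedra.push_back(std::move(t));
        return tetrahedra.back().get();
    }
};
```

With this layout, two tetrahedrons that share a face also share the three `Edge` objects of that face, so an algorithm standing on an edge can reach every tetrahedron around it without searching the whole mesh.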

Detecting collisions between surgical tools and the target object is the first step of interaction. We use an AABB (axis-aligned bounding box) tree for the target object. Since the object can deform, the AABB tree must be updated in real time. To reduce computational cost, a top-down updating algorithm is implemented, and each AABB is enlarged by a small amount so that no update is needed as long as all of its vertices still lie within its bounds. The touching and grasping tool is treated as a point, while the cutting tool is treated as a line segment. Once a collision is detected, the penetration depth is used to calculate the interaction force. This force is applied to the object surface to drive deformation, while the opposite force is sent to the haptic device. For the cutting tool, the cutting process begins once the interaction force exceeds a certain limit. From then on, an alternative neighborhood search algorithm that exploits spatial coherence is used for collision detection instead of the AABB tree.
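Two of the ideas above, the enlarged bounding box and the force derived from penetration depth, can be sketched as follows. This is an illustration under stated assumptions, not the system's code: it handles a single box rather than a whole tree, all names (`fitEnlarged`, `refitIfNeeded`, `penaltyForces`) are hypothetical, and the force is assumed to grow linearly with depth through a made-up `stiffness` constant, since the text says only that the penetration depth is used.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

struct Vec3 {
    double x, y, z;
};

struct AABB {
    Vec3 lower, upper;
    bool contains(const Vec3& p) const {
        return p.x >= lower.x && p.x <= upper.x &&
               p.y >= lower.y && p.y <= upper.y &&
               p.z >= lower.z && p.z <= upper.z;
    }
};

// Tight box around the points, then grown by `margin` on every side.
AABB fitEnlarged(const std::vector<Vec3>& points, double margin) {
    assert(!points.empty() && margin >= 0.0);
    AABB box{points[0], points[0]};
    for (const Vec3& p : points) {
        box.lower = {std::min(box.lower.x, p.x), std::min(box.lower.y, p.y),
                     std::min(box.lower.z, p.z)};
        box.upper = {std::max(box.upper.x, p.x), std::max(box.upper.y, p.y),
                     std::max(box.upper.z, p.z)};
    }
    box.lower = {box.lower.x - margin, box.lower.y - margin, box.lower.z - margin};
    box.upper = {box.upper.x + margin, box.upper.y + margin, box.upper.z + margin};
    return box;
}

// Skips the update while every vertex is still inside the enlarged box.
// Returns true only when the box actually had to be rebuilt.
bool refitIfNeeded(AABB& box, const std::vector<Vec3>& points, double margin) {
    const bool allInside = std::all_of(points.begin(), points.end(),
                                       [&box](const Vec3& p) { return box.contains(p); });
    if (allInside) return false;
    box = fitEnlarged(points, margin);
    return true;
}

struct InteractionForces {
    Vec3 onObject;  // applied to the object surface to drive deformation
    Vec3 onDevice;  // equal and opposite, sent to the haptic device
};

// ASSUMPTION: linear penalty model, |F| = stiffness * depth, along the surface normal.
InteractionForces penaltyForces(const Vec3& outwardNormal, double depth, double stiffness) {
    assert(depth >= 0.0 && stiffness >= 0.0);
    const double magnitude = stiffness * depth;
    const Vec3 onDevice{magnitude * outwardNormal.x, magnitude * outwardNormal.y,
                        magnitude * outwardNormal.z};
    const Vec3 onObject{-onDevice.x, -onDevice.y, -onDevice.z};
    return {onObject, onDevice};
}
```

The margin trades tightness for update frequency: a larger margin means fewer refits during small deformations but more false-positive overlaps that must be resolved at the primitive level.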

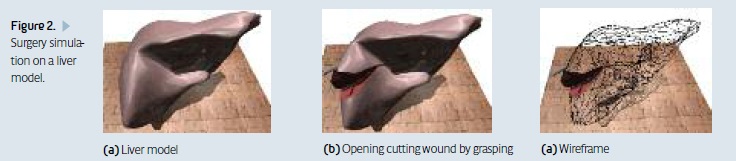

Target objects in surgery simulation are usually human tissues and organs. These objects deform considerably under external force and are thus referred to as “deformable objects.” Simulating their deformation is what differentiates surgery simulation from other virtual reality applications that involve only rigid bodies. Deformation calculation usually consumes a large amount of computation time, so efficient algorithms and implementations are essential to meet real-time requirements. We implement two deformation algorithms, both based on the Finite Element Method. The first pre-computes deformation modes under unit force vectors and uses superposition to calculate the displacements of vertices under external forces. The second is based on a tensor-mass model: the stiffness matrix is distributed to vertices and edges as tensors, while the mass of each tetrahedron is distributed to its vertices. This algorithm dynamically calculates changes in the velocities and positions of vertices. It is computationally more intensive but needs no pre-computation.
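The superposition step of the first algorithm can be illustrated with a short sketch. It assumes the pre-computed modes are stored as one displacement vector per degree of freedom (the response to a unit force on that degree of freedom) and that the external load is a sparse list of forces. The function name `superpose`, the storage layout and the numbers in the usage example are all hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

using Displacements = std::vector<double>;

// unitResponses[j] holds the displacement of every degree of freedom caused by
// a unit force on degree of freedom j (computed offline in this sketch's model).
// forces lists (degree of freedom, force magnitude) pairs for the current load.
// Because the model is linear, the result is the weighted sum of the modes.
Displacements superpose(const std::vector<Displacements>& unitResponses,
                        const std::vector<std::pair<std::size_t, double>>& forces) {
    assert(!unitResponses.empty());
    const std::size_t dofCount = unitResponses[0].size();
    Displacements u(dofCount, 0.0);
    for (const auto& force : forces) {
        assert(force.first < unitResponses.size());
        const Displacements& mode = unitResponses[force.first];
        assert(mode.size() == dofCount);
        for (std::size_t i = 0; i < dofCount; ++i) {
            u[i] += force.second * mode[i];  // scale the unit response by the force
        }
    }
    return u;
}
```

For example, with two made-up modes `{2, 1}` and `{1, 3}` and forces of 0.5 and 2 on the two degrees of freedom, the call returns `{3, 6.5}`. The run-time cost grows with the number of loaded degrees of freedom rather than with the cost of solving the full system, which is what makes the pre-computation worthwhile.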

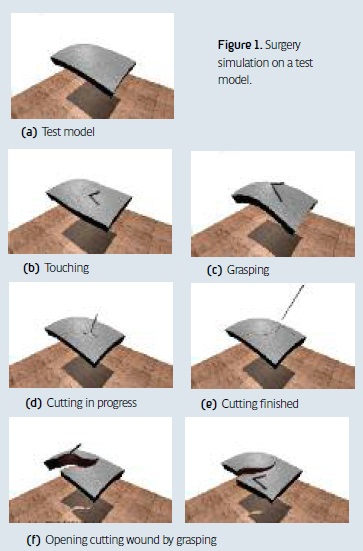

Cutting is one of the most basic operations in surgery. To make cutting realistic, the cutting wound has to form gradually, following the movement of the cutting tool through the target object, and the consistency of the mesh data structure has to be maintained throughout. We implement two cutting methods. The first uses a minimal element subdivision method to split the tetrahedrons swept by the cutting tool into smaller tetrahedrons. This method requires classifying the cutting state of each tetrahedron and can sometimes run into invalid states because of irregular movements of the cutting tool. The second method forgoes cutting state classification. It is divided into two stages: subdivision and splitting. In the subdivision stage, tetrahedrons swept by the cutting tool are subdivided to eliminate face and edge intersections. No cutting wound is formed in this stage, but after subdivision the cutting path is composed solely of tetrahedron faces. In the splitting stage, the faces on the cutting path are split in two to form the cutting wound.
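The splitting stage of the second method can be pictured with a heavily simplified sketch. It assumes an index-based mesh rather than the pointer-based structure described earlier, takes the vertices on the cutting path and the side of each tetrahedron as given inputs, and duplicates every listed vertex. A real implementation would have to work out the sides itself and leave vertices on the cut front unsplit. The names `SimpleMesh` and `splitAlongCut` are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <vector>

struct Vec3 {
    double x, y, z;
};

using Tet = std::array<std::size_t, 4>;  // four vertex indices

struct SimpleMesh {
    std::vector<Vec3> positions;
    std::vector<Tet> tets;
};

// Duplicates each vertex on the cutting path and rewires the tetrahedrons
// flagged in onNegativeSide to the copies, so the two sides no longer share
// the faces on the path. Returns the map from original to duplicate index.
std::unordered_map<std::size_t, std::size_t> splitAlongCut(
        SimpleMesh& mesh,
        const std::vector<std::size_t>& cutVertices,
        const std::vector<bool>& onNegativeSide) {
    assert(onNegativeSide.size() == mesh.tets.size());
    std::unordered_map<std::size_t, std::size_t> duplicateOf;
    for (std::size_t v : cutVertices) {
        assert(v < mesh.positions.size());
        if (duplicateOf.count(v) != 0) continue;  // already duplicated
        const Vec3 copy = mesh.positions[v];      // copy before the vector grows
        duplicateOf[v] = mesh.positions.size();
        mesh.positions.push_back(copy);
    }
    for (std::size_t t = 0; t < mesh.tets.size(); ++t) {
        if (!onNegativeSide[t]) continue;  // the positive side keeps the originals
        for (std::size_t& v : mesh.tets[t]) {
            const auto it = duplicateOf.find(v);
            if (it != duplicateOf.end()) v = it->second;
        }
    }
    return duplicateOf;
}
```

The duplicates start at the same positions as the originals, so the wound is initially closed. It opens only when the deformation step moves the two sides apart.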

Our future research will focus on the following aspects:

- Simulating sewing operations. This involves simulating the interaction between the needle, the suture and the target objects, as well as the deformation and knot tying of the suture.

- Interaction between two deformable objects. Currently we can only simulate interaction between one surgical tool and one target object, but real surgical operations often involve multiple tissues and organs. Collision detection and response algorithms are more difficult to design for two deformable objects than for two rigid bodies, since both objects can deform and the deformation in turn affects the collision results.

- Non-linear deformation. Our current deformation algorithms use linear elasticity, but real human tissues are highly non-linear and anisotropic. Non-linear finite element theory is much more complex than its linear counterpart, and the superposition principle no longer applies in the non-linear case. Designing efficient non-linear deformation algorithms is a challenge.

- Using GPGPU. GPUs have been used for general-purpose computation for quite some time. With recent driver support from both NVIDIA and AMD, using OpenCL to write platform-independent programs that directly harness the parallel processing power of the GPU, without going through the rendering pipeline, has finally become a reality. There has been very little research on using GPGPU in surgery simulation. We will explore using GPGPU to accelerate deformation and collision detection algorithms for deformable objects.

Shiyu Jia, Ph.D.

Zhenkuan Pan, Ph.D.

Qingdao University

P.R. of China

shiyu.jia@yahoo.com.cn
