By Andreas Pusch & Frank Steinicke

Mixed Reality (MR) display and tracking technologies, as well as associated interaction techniques, have evolved rapidly. Recently, they have begun to spread into the mass market and now enable a variety of promising healthcare applications, some of which are already being applied in medical practice.

The current streams in healthcare can be summarized as follows:

Some systems overlay synthetic multimodal head-up information on tracked real objects (e.g., patient history and rehabilitation progress data, or specific information attached to certain body parts), while others permit direct guidance and training of surgeons and therapists by means of interactive, co-located real-time simulations (e.g., visuo-haptic remote navigation of inspection or surgery equipment, annotations of regions to operate on, 2-D/3-D visualizations of hidden organs and structures). In the context of mental and motor disorder treatment, patients are presented with highly controllable MR stimuli (e.g., protocols for cognitive and sensorimotor function assessment, [self-]perceptual illusions, and phobic or otherwise mentally or emotionally stressing situations), and their responses are monitored and evaluated.

Our research focuses on static and dynamic manipulations of a user’s perception and action during body and limb movement, locomotion, as well as during interaction with multimodal virtual and augmented real environments. Within Milgram’s reality-virtuality continuum, it is “Augmented Virtuality” (AV) that fits our methodology best, because we bring the real to the virtual rather than the other way around (the latter being called Augmented Reality, AR). That is, we seek to transfer the user’s body to the virtual world – and there gain full control over its multimodal manifestation.

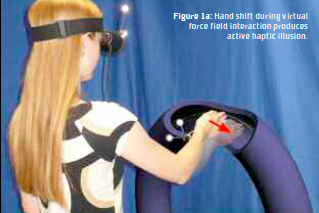

In 2011, we presented a system capable of introducing visuoproprioceptive conflicts at the hand and lower arm levels in a very flexible way. Video of a user’s limbs is first captured using stereo cameras built into a head-mounted display (HMD) and then embedded into a purely virtual world.

This self-representation can be shifted in 3-D and, though not reported so far, scaled in place and shaded differently while the user interacts with virtual objects or avatars. As shown in Figure 1, the system proved useful for self-perception studies and the evocation of haptic illusions. But it can do more: provide unconscious hand movement guidance and error correction for people with limited manual abilities; enable studies on static and dynamic multisensory conflict processing in healthy and neurologically impaired subjects; offer a framework for the investigation and treatment of phobic behavior involving a subject’s own hands (e.g., Chiraptophobia, Ablutomania and -phobia); simulate dynamic prism glasses; and support the development of more accessible or assistive user interfaces (UI). We are now conceiving a more sophisticated system that offers full control over all parameters of the perceived “self”.
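To make the idea of shifting and scaling the self-representation concrete, here is a minimal sketch. It is purely illustrative and not the system described above: the class `SelfRepresentationTransform`, its `offset` and `scale` parameters, the choice of the wrist as pivot, and all numeric values are assumptions introduced for this example.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SelfRepresentationTransform:
    """Hypothetical parameters for displacing a captured limb in the virtual scene."""

    offset: Vec3 = (0.0, 0.0, 0.0)  # assumed 3-D shift, in metres
    scale: float = 1.0              # assumed uniform scale factor about the pivot

    def apply(self, point: Vec3, pivot: Vec3) -> Vec3:
        """Scale `point` about `pivot` (i.e. in place), then shift it by `offset`."""
        x, y, z = (
            pv + self.scale * (p - pv) + o
            for p, pv, o in zip(point, pivot, self.offset)
        )
        return (x, y, z)


# Usage: show the hand 5 cm to the right and 20 % larger than it really is,
# while the real hand stays where it is (a visuoproprioceptive conflict).
wrist = (0.0, 1.0, 0.4)      # pivot, e.g. a tracked wrist position (made up)
fingertip = (0.0, 1.0, 0.6)  # a point on the real hand (made up)
conflict = SelfRepresentationTransform(offset=(0.05, 0.0, 0.0), scale=1.2)
seen_fingertip = conflict.apply(fingertip, wrist)
```

Scaling about a pivot on the limb, rather than about the scene origin, is what keeps the enlarged hand "in place"; the offset then determines how far the seen hand departs from the felt one.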

Manipulative AV approaches can also be applied during locomotion. In healthy people walking in the real world, sensory information from the vestibular, proprioceptive and visual systems, together with efference copy signals, produces consistent multisensory cues. But various impairments, ranging from low-level perceptual to cognitive, can make it very difficult to maintain orientation and explore the world by walking naturally. It has been demonstrated that users tend to unwittingly compensate for small perceptual inconsistencies. For instance, manipulations applied to the view in an AV environment cause users to unknowingly compensate by repositioning and/or reorienting themselves. Since 2008, we have performed a series of experiments in which we analyzed how far users can be guided along a real-world path that differs from the path they perceive in the AV environment. In this context (see Figure 2), we found that an AV self-representation of the user, that is, a high-fidelity video-based visual blending, further improves the sense of presence during walking. Hence, Redirected Walking techniques offer enormous potential for the diagnosis and treatment/rehabilitation of disorders affecting, for instance, the acquisition and maintenance of spatial maps, the production of locomotor commands, actual walking, and a broad diversity of related everyday cognitive activities.
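One simple way to picture such a view manipulation is a rotation gain, where the displayed view rotates slightly more or less than the head actually does. The sketch below is an illustration under that assumption, not the implementation used in the experiments; the function names and the gain value are hypothetical.

```python
def virtual_yaw_delta(real_yaw_delta_deg: float, rotation_gain: float) -> float:
    """Map a measured real head rotation to the rotation shown in the AV view."""
    return rotation_gain * real_yaw_delta_deg


def real_turn_for(virtual_turn_deg: float, rotation_gain: float) -> float:
    """Real rotation a user ends up performing to complete a given virtual turn."""
    if rotation_gain <= 0:
        raise ValueError("rotation_gain must be positive")
    return virtual_turn_deg / rotation_gain


# Usage with a made-up gain: the view rotates 10 % faster than the head,
# so the user physically turns less than the 90 degrees seen in the scene.
GAIN = 1.1  # hypothetical value, not a measured detection threshold
real_turn = real_turn_for(90.0, GAIN)
redirection = 90.0 - real_turn  # degrees by which real and virtual headings diverge
```

Because the user compensates without noticing, the difference between the virtual and the real turn accumulates into a real-world path that departs from the perceived one.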

In conclusion, we believe that the principles of MR have the potential to sustainably stimulate fruitful multidisciplinary research and to contribute to an even faster growth of innovative, future-oriented healthcare applications. We look forward to being a part of this endeavor.

Andreas Pusch, Ph.D. Frank Steinicke, Ph.D. Immersive Media Group Julius-Maximilians-Universität Würzburg Germany andreas.pusch@uni-wuerzburg.de
